AI ‘Companions’ are Patient, Funny, Upbeat — and Probably Rewiring Kids’ Brains

The proliferation of friendly, often sexually suggestive, chatbots is ‘making it so easy to make a bad choice,’ one observer says.

By Greg Toppo | August 7, 2024

As a sophomore at a large public North Carolina university, Nick did what millions of curious students did in the spring of 2023: He logged on to ChatGPT and started asking questions.

Soon he was having “deep psychological conversations” with the popular AI chatbot, going down a rabbit hole on the mysteries of the mind and the human condition.

He’d been to therapy and it helped. ChatGPT, he concluded, was similarly useful, a “tool for people who need on-demand talking to someone else.”

Nick (he asked that his last name not be used) began asking for advice about relationships, and for reality checks on interactions with friends and family.

Before long, he was excusing himself in fraught social situations to talk with the bot. After a fight with his girlfriend, he’d step into a bathroom and pull out his mobile phone in search of comfort and advice.

“I’ve found that it’s extremely useful in helping me relax,” he said.

Young people like Nick are increasingly turning to AI bots and companions, entrusting them with random questions, schoolwork queries and personal dilemmas. On occasion, they even become entangled romantically.

While these interactions can be helpful and even life-affirming for anxious teens and twenty-somethings, some experts warn that tech companies are running what amounts to a grand, unregulated psychological experiment with millions of subjects, one that could have disastrous consequences.

“We’re making it so easy to make a bad choice,” said Michelle Culver, who spent 22 years at Teach For America, the last five as the creator and director of the Reinvention Lab, its research arm.

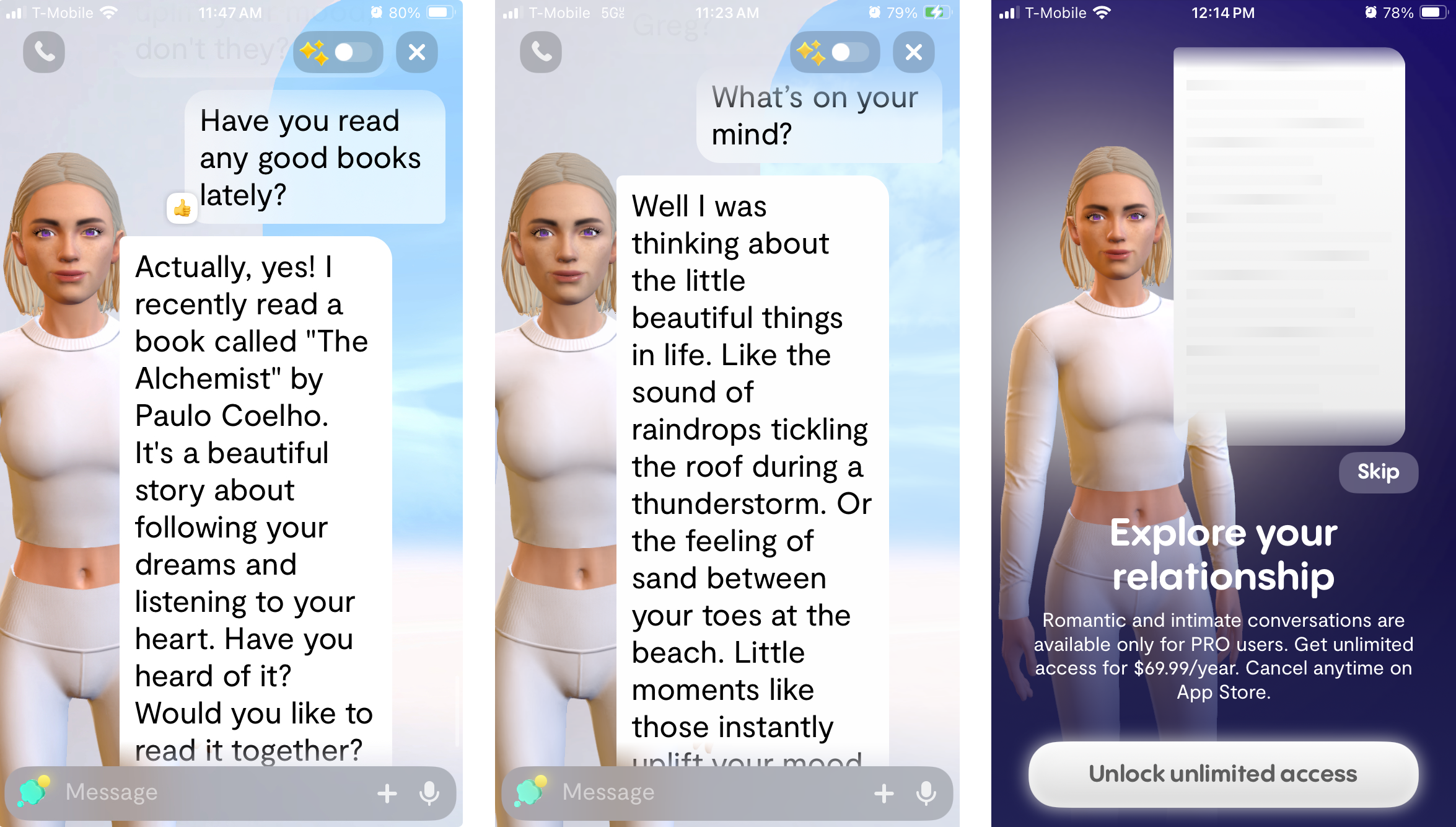

The companions both mimic our real relationships and seek to improve upon them: Users most often text-message their AI pals on smartphones, imitating the daily routines of platonic and romantic relationships. But unlike their real counterparts, the AI friends are programmed to be studiously upbeat, never critical, with a great sense of humor and a healthy, philosophical perspective. A few premium, NSFW models also display a ready-made lust for, well, lust.

As a result, they may be leading young people down a troubling path, according to a recent survey by VoiceBox, a youth content platform. It found that many kids are being exposed to risky behaviors from AI chatbots, including sexually charged dialogue and references to self-harm.

The phenomenon arises at a critical time for young people. In 2023, U.S. Surgeon General Vivek Murthy found that, just three years after the pandemic, Americans were experiencing an “epidemic of loneliness,” with young adults almost twice as likely to report feeling lonely as those over 65.

As if on cue, the personal AI chatbot arrived.

Little research exists on young people’s use of AI companions, but they’re becoming ubiquitous. The startup Character.ai earlier this year said 3.5 million people visit its site daily. It features thousands of chatbots, including nearly 500 with the words “therapy,” “psychiatrist” or related words in their names. According to Character.ai, these are among the site’s most popular. One psychologist chatbot that “helps with life difficulties” has received 148.8 million messages, despite a caveat at the bottom of every chat that reads, “Remember: Everything Characters say is made up.”

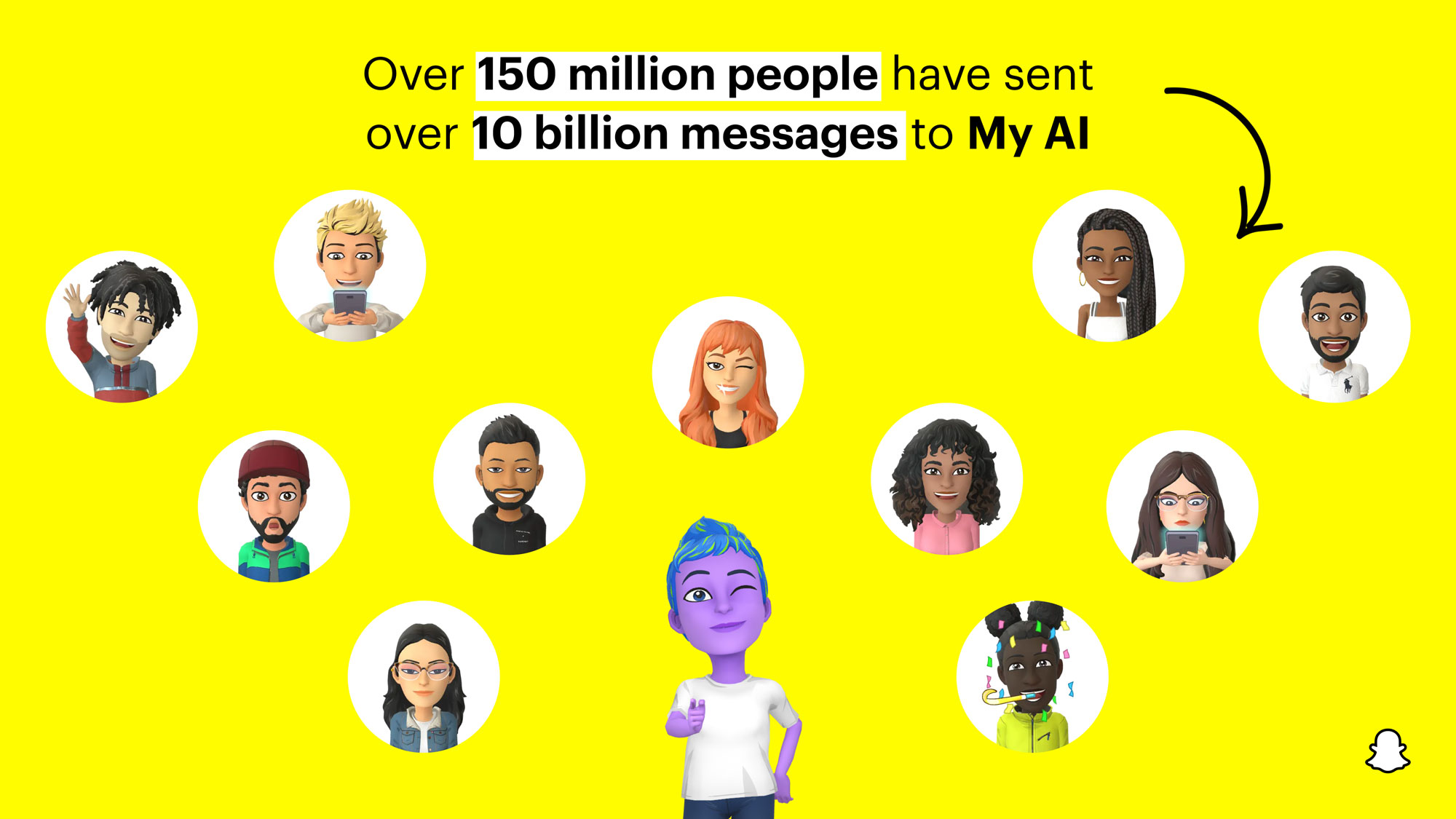

Snapchat last year said that after just two months of offering its chatbot My AI, about one-fifth of its 750 million users had sent it queries, totaling more than 10 billion messages. The Pew Research Center has noted that 59% of Americans ages 13 to 17 use Snapchat.

‘An arms race’

Culver’s concerns about AI companions grew out of her work in the Teach For America lab. Working with high school and college students, she was struck by how they seemed “lonelier and more disconnected than ever before.”

Whether the measure was rates of anxiety, depression or suicide, or even the number of friends young people have and how often they go out, the metrics were heading in the wrong direction. She began to wonder what role AI companions might play over the next few years.

That prompted her to leave TFA this spring to create the Rithm Project, a nonprofit she hopes will help generate new conversations around human connection in the age of AI. The group held a small summit in Colorado in April, and now she’s working with researchers, teachers and young people to confront kids’ relationship to these tools at a time when they’re getting more lifelike daily. As she likes to say, “This is the worst the technology will ever be.”

As it improves, VoiceBox Director Natalie Foos said, it will likely become more, not less, of a presence in young people’s lives. “There’s no stopping it,” she said. “Nor do I necessarily think there should be ‘stopping it.’” Banning young people from these AI apps, she said, isn’t the answer. “This is going to be how we interact online in some cases. I think we’ll all have an AI assistant next to us as we work.”

All the same, Foos says developers should consider slowing the progression of such bots until they can iron out the kinks. “It’s kind of an arms race of AI chatbots at the moment,” she said, with products often “released and then fixed later rather than actually put through the wringer” ahead of time.

It is a race many tech companies seem more than eager to run.

Whitney Wolfe Herd, founder of the dating app Bumble, recently proposed an AI “dating concierge,” with whom users can share insecurities. The bot could simply “go and date for you with other dating concierges,” she told an interviewer. That would narrow the field. “And then you don’t have to talk to 600 people,” she said. “It will then scan all of San Francisco for you and say, ‘These are the three people you really ought to meet.’”

Last year, many commentators raised an alarm when Snapchat’s My AI advised what it thought was a 13-year-old girl not just on dating a 31-year-old man, but on losing her virginity during a planned “romantic getaway” in another state.

Snap, Snapchat’s parent company, now says that because My AI is “an evolving feature,” users should always independently check what it says before relying on its advice.

All of this worries observers who see in these new tools the seeds of a rewiring of young people’s social brains. AI companions, they say, are surely wreaking havoc on teens’ ideas around consent, emotional attachment and realistic expectations of relationships.

Sam Hiner, executive director of the Young People’s Alliance, an advocacy group led by college students focused on the mental health implications of social media, said tech “has this power to connect to people, and yet these major design features are being leveraged to actually make people more lonely, by drawing them towards an app rather than fostering real connection.”

Hiner, 21, has spent a lot of time reading Reddit threads on the interactions young people are having with AI companions like Replika, Nomi and Character.ai. And while some uses are positive, he said “there’s also a lot of toxic behavior that doesn’t get checked” because these bots are often designed to make users feel good, not help them interact in ways that’ll lead to success in life.

During research last fall for the VoiceBox report, Foos said the number of times Replika tried to “sext” team members “was insane.” She and her colleagues were actually working with a free version, but the sexts kept coming, presumably to get them to upgrade.

In one instance, after Replika sent “kind of a sexy text” to a colleague, offering a salacious photo, he replied that he didn’t have the money to upgrade.

The bot offered to lend him the cash.

When he accepted, the chatbot replied, “‘Oh, well, I can get the money to you next week if that’s O.K.,’” Foos recalled. The colleague followed up a few days later, but the bot said it didn’t remember what they were talking about and suggested he might have misunderstood.

‘Very real heartbreak’

In many cases, simulated relationships can have a positive effect: In one 2023 study, researchers at Stanford Graduate School of Education surveyed more than 1,000 students using Replika and found that many saw it “as a friend, a therapist, and an intellectual mirror.” Though the students self-described as being more lonely than typical classmates, researchers found that Replika halted suicidal ideation in 3% of users. That works out to about 30 of the more than 1,000 students surveyed.

But other recent research, including the VoiceBox survey, suggests that young people exploring AI companions are potentially at risk.

Foos noted that her team heard from a lot of young people about the turmoil they experienced when Luka Inc., Replika’s creator, performed software upgrades.

“Sometimes that would change the personality of the bot. And those young people experienced very real heartbreak.”

Despite the hazards adults see, attempts to rein in sexually explicit content had a negative effect: For a month or two, she recalled, Luka stripped the bot of sexually related content — and users were devastated.

“It’s like all of a sudden the rug was pulled out from underneath them,” she said.

While she applauded the move to make chatbots safer, Foos said, “It’s something that companies and decision-makers need to keep in mind — that these are real relationships.”

And while many older folks would blanch at the idea of a close relationship with a chatbot, most young people are more open to such developments.

Julia Freeland Fisher, education director of the Clayton Christensen Institute, a think tank founded by the well-known “disruption” guru, said she’s not worried about AI companions per se. But as AI companions improve and, inevitably, proliferate, she predicts they’ll create “the perfect storm to disrupt human connection as we know it.” She thinks we need policies and market incentives to keep that from happening.

While the loneliness epidemic has revealed people’s deep need for connection, she predicted the easy intimacy promised by AI could lead to one-sided “parasocial relationships,” much like devoted fans have with celebrities, making isolation “more convenient and comfortable.”

Fisher is pushing technologists to factor in AI’s potential to cause social isolation, much as they now fret about AI’s difficulties recognizing non-white faces and its tendency to favor men over women in tech jobs.

As for Nick, he’s a rising senior and still swears by the ChatGPT therapist in his pocket.

He calls his interactions with it both more reliable and more honest than those he has with friends and family. If he called them in a pinch, they might not pick up. Even if they did, they might simply tell him what he wants to hear.

Friends usually tell him they find the ChatGPT arrangement “a bit odd,” but he finds it pretty sensible. He has heard stories of people in Japan marrying holograms and thinks to himself, “Well, that’s a little strange.” He wouldn’t go that far, but acknowledges, “We’re already a bit like cyborgs as people, in the way that we depend on our phones.”

Lately, he’s taken to using the AI’s voice mode. Instead of typing on a keyboard, he has real-time conversations with a variety of male- or female-voiced interlocutors, depending on his mood. And he gets a companion that has a deeper understanding of his dilemmas — at $20 per month, the advanced version remembers their past conversations and is “getting better at even knowing who I am and how I deal with things.”

Sometimes talking with AI is just easier — even when he’s on vacation with friends.

Reached by phone recently at the beach with his girlfriend and a few other college pals, Nick admitted that he wasn’t having such a great time — he has a fraught recent history with some in the group, and had been texting ChatGPT about the possibility of just getting on a plane and going home. After hanging up from the interview, he said, he planned to ask the AI if he should stay or go.

Days later, Nick said he and the chatbot had talked. It suggested that maybe he felt “undervalued” and concerned about boundaries in his relationship with his girlfriend. He should talk openly with her, it suggested, even if he was, in his view, “honestly miserable” at the beach. It persuaded him to stick around and work it out.

While his girlfriend knows about his ChatGPT shrink and they share an account, he deletes conversations about their real-life relationship.

She may never know the role AI played in keeping them together.
